From AI FOMO to AI Strategy

On New Year’s Eve, I published a piece in Inc.com that I’ve been wanting to write for months: “How FOMO Is Turning AI Into a Cybersecurity Nightmare.” I wrote it because the security, legal, and governance risks aren’t hypothetical anymore. They’re showing up in breach notifications, tribunal rulings, and incident reports.

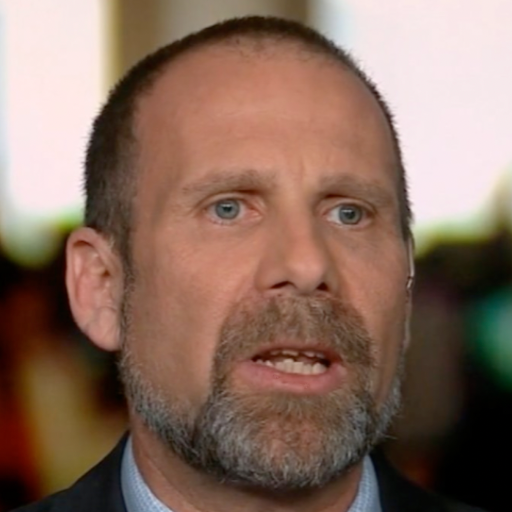

It was prompted by a mid-2025 conversation with a CEO client who told me, with complete confidence, “We need AI everywhere by Q4.” When I asked what problems the company was trying to solve, he said, “I don’t know, but all our competitors are doing it, and the board is giving me some real pressure.”

That’s when I realized we had crossed from innovation into something more dangerous.

Six months on, there is a consistent pattern: companies that rushed deployment are now dealing with consequences they never anticipated.

The most common problem in 2025 was supply chain failures at AI vendors. They stemmed not from the AI functions themselves, but from the most basic information security configurations those vendors use to secure their products.

The Thing That Keeps Me Up at Night

Throughout 2024 and 2025, I watched smart executives, people who would never approve a major technology deployment without proper due diligence, greenlight AI implementations based solely on fear of being left behind. Board members ask, “What’s our AI strategy?” Investors want to hear about AI-first initiatives. Competitors are plastering “AI-powered” across their websites.

The pressure is real. The Fear Of Missing Out is real. But what’s also real is that AI tools operate fundamentally differently than the enterprise software we’ve spent decades learning to secure.

Why This Matters More Than Most Security Issues

Here’s what makes the current AI security crisis different from typical technology risk: the vendors themselves have made it harder to assess what you’re actually buying.

When AI companies use terms like “red teaming” and “vulnerability management,” your security teams hear familiar language and assume familiar protections. But these terms mean completely different things in AI contexts. Traditional red teaming tests whether attackers can breach your systems. AI red teaming often just tests whether a chatbot will generate offensive content.

Both matter. But only one protects your data.

This linguistic confusion isn’t accidental, and it isn’t trivial. It’s creating a gap where executives think they’ve done due diligence when they’ve actually only scratched the surface.

The Non-Deterministic Elephant in the Room

The other issue that prompted this article is something most business leaders don’t fully grasp: generative AI is inherently unpredictable. The same input can produce different outputs at different times.

For some use cases, that’s fine. For others, it’s a liability waiting to happen.
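For readers who want to see why this happens, here is a minimal sketch of the sampling step behind it. It is a toy illustration, not any vendor's implementation: the function `sample_next_token`, the three candidate words, and their scores are all hypothetical. It assumes the model picks each next word at random, weighted by score, with a "temperature" setting that controls how much randomness is allowed.

```python
import math
import random


def sample_next_token(logits, temperature, rng):
    """Pick one token from hypothetical scores using temperature sampling."""
    if temperature == 0:
        # Greedy decoding: always return the highest-scoring token.
        return max(logits, key=logits.get)
    # Scale scores by temperature, then convert to softmax-style weights.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    weights = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    tokens = list(weights)
    return rng.choices(tokens, weights=[weights[t] for t in tokens], k=1)[0]


# Hypothetical scores for the word that follows "Refunds are ..."
logits = {"allowed": 2.0, "unavailable": 1.6, "retroactive": 1.2}

rng = random.Random()
sampled = [sample_next_token(logits, 1.0, rng) for _ in range(1000)]
greedy = [sample_next_token(logits, 0, rng) for _ in range(1000)]

print("temperature 1.0:", sorted(set(sampled)))  # several different answers
print("temperature 0:  ", sorted(set(greedy)))   # a single answer
```

With the temperature above zero, the same prompt yields different completions across runs. In this toy version, setting the temperature to zero removes the variation, but hosted models may still vary at that setting, so treat it as a mitigation to test rather than a guarantee.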

I’ve seen companies deploy AI chatbots to answer customer questions about contract terms, product specifications, and legal policies without considering what happens when the AI gives wrong answers. Air Canada found out the hard way when a tribunal held the airline liable for its chatbot’s incorrect information about bereavement fares.

The question isn’t whether AI will make mistakes. It will. The question is whether your organization has thought through what happens when those mistakes occur in customer-facing or business-critical contexts.

What I Want Readers to Take Away

The article lays out a framework for thinking about AI security that goes beyond typical vendor questionnaires and compliance checkboxes. I wanted to give executives and security professionals a practical approach to:

- Asking the right questions before deployment

- Understanding what AI vendors really mean when they use security terminology

- Recognizing where non-deterministic behavior creates unacceptable business risk

- Building proper controls around AI tools that actually access your systems and data

The point isn’t to stop AI adoption. Several of my clients are seeing genuine business value from well-implemented AI tools. The point is that “well-implemented” requires a level of rigor that most organizations aren’t applying because they’re too busy trying to keep up with competitors.

FOMO Is Not a Strategy

What I really want executives to understand is that rushing into AI deployment because everyone else is doing it is the opposite of competitive advantage. It’s technical debt that compounds like high-interest credit card debt.

The companies that will win with AI aren’t the ones who deployed fastest. They’re the ones who deployed thoughtfully, with proper foundations, realistic risk assessments, and systems that can scale securely.

Speed comes from discipline, not shortcuts. And right now, the AI market is rewarding shortcuts while punishing discipline. That won’t last.

Read the full article at Inc.com for the detailed framework, real-world examples, and specific controls your organization needs before your next AI deployment.